To watch the YouTube video on this topic (recommended if you've never used the service before), click here.

Introduction: IBM Watson's Visual Recognition Service

We've already done a tutorial on Watson's Tone Analyzer to get started with Watson, so in this article we'll keep learning by using its Visual Recognition service.

The Visual Recognition service does what its name says: it accepts an image and returns the classes it thinks the image belongs to, each with a confidence score. You can also train your own classifier to recognize your own images.

Setting Up The Service On IBM's Website

We're going to set up the Visual Recognition service on IBM's website. Sign up for a Bluemix account if you haven't already. (It's an easy signup, and you don't need a credit card.)

Next, go to the Visual Recognition page and click the "Get Started Free" button. It should automatically create the project for you. You should see something like this:

Click the "Show" button and copy the API key. Hit the Launch tool button. Now then...

Playing With The Visual Recognition Tool

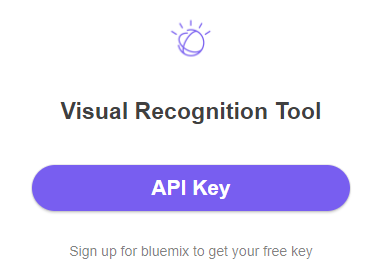

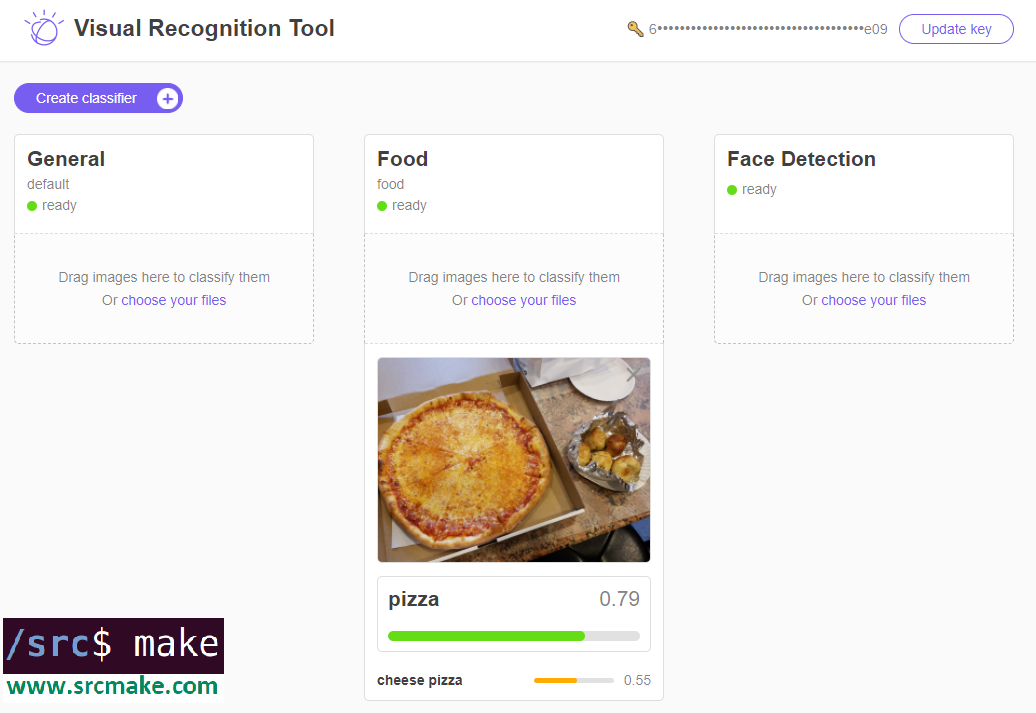

You should see the following page if you launched the Visual Recognition Tool. (If you don't see the page, then click here.)

Click the API key button and enter your API key.

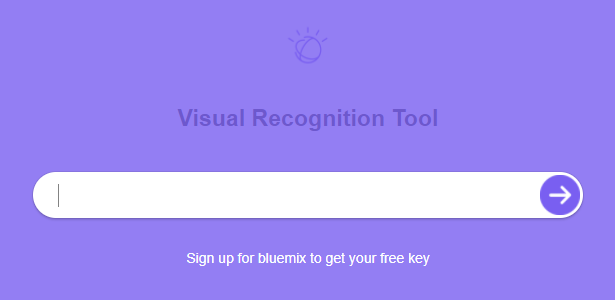

You should see the following if your API key works.

Congrats, your Visual Recognition service is set up on Watson. Sort of. Play with the webpage by downloading pictures and loading them into the widgets to see how the classification works. Here are three sample pictures (right click -> Save image).

If you drag the pictures into the Visual Recognition service, you'll see something like the following.

As you can see, there are currently three Visual Recognition tools: a General classifier, a Food classifier, and a Face classifier. Feel free to play with the tool a bit more, but...we need to actually write some code now.

Creating A Node Project To Use The Visual Recognition Service

The webpage to use the tool is fun and all, but we need to be able to use this service in our actual code projects. Let's create a basic node project to use the Visual Recognition service.

Since we already created a starter Node project for the Tone Analyzer tutorial, we'll just copy that code from GitHub for this project. In your terminal, clone the project and move into its directory with the following commands:

git clone https://github.com/srcmake/watson-node-starter
cd watson-node-starter

It's just a basic node project with the watson library installed, and a config.js file to store our credentials.

Open the "config.js" file and enter the API key that we got when we created the service on IBM's website.

We need a test image to try and recognize, so download the following image, move it into our project folder, and rename it to "pizza_2.jpg".

Okay, our base Watson Node project is set up. Now we need to actually write some test code to run this service. We COULD call the Visual Recognition endpoint directly with plain HTTP requests, but we're going to use Watson's library instead. See the API reference for details on the available calls.

In our "index.js", add the following code:
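Here's a minimal sketch of what this kind of index.js could look like. It assumes the `watson-developer-cloud` package's `VisualRecognitionV3` client and its `classify` method. The `classifyImage` helper, the `apiKey` field name in config.js, and the `version_date` value are assumptions for illustration (constructor option names have varied between SDK releases), so check them against the starter project and the API reference.

```javascript
const fs = require('fs');

// Hypothetical helper: sends one local image to the General classifier.
// `client` is expected to expose classify(params, callback), as the
// VisualRecognitionV3 client from watson-developer-cloud does.
function classifyImage(client, imagePath, callback) {
  const params = {
    // The image to classify is passed as a readable stream.
    images_file: fs.createReadStream(imagePath),
  };
  client.classify(params, (err, response) => {
    if (err) return callback(err);
    callback(null, response);
  });
}

// Usage sketch (commented out: it needs the SDK installed and a real key).
// const VisualRecognitionV3 = require('watson-developer-cloud/visual-recognition/v3');
// const config = require('./config');
// const client = new VisualRecognitionV3({
//   api_key: config.apiKey,       // assumed field name in config.js
//   version_date: '2016-05-20',   // assumed API version date
// });
// classifyImage(client, './pizza_2.jpg', (err, res) => {
//   if (err) return console.error(err);
//   console.log(JSON.stringify(res, null, 2));
// });
```

Passing the client in as an argument keeps the helper easy to try out with a stand-in object before you wire up real credentials.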

Run the project with the following command.

node index.js

The output should be the following:

Pretty interesting, there are a lot of results. Of course, we used the "General" Visual Recognition tool, not the one for Faces or Food. To try those out (and see how the Visual Recognition API works), check out the API reference documentation.
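With that many results, it can help to boil the response down to the labels you care about. The sketch below assumes the v3 response shape (an `images` array, each with `classifiers`, each with `classes` holding a `class` name and a `score`). The `summarizeClasses` helper and the sample data are made up for illustration, so real output will differ.

```javascript
// Hypothetical helper: flattens a classify response into
// [{ name, score }] pairs, highest score first.
function summarizeClasses(response, minScore = 0) {
  const rows = [];
  for (const image of response.images || []) {
    for (const classifier of image.classifiers || []) {
      for (const cls of classifier.classes || []) {
        if (cls.score >= minScore) {
          rows.push({ name: cls.class, score: cls.score });
        }
      }
    }
  }
  return rows.sort((a, b) => b.score - a.score);
}

// Made-up response for illustration only; these are not real Watson scores.
const sampleResponse = {
  images: [{
    image: 'pizza_2.jpg',
    classifiers: [{
      classifier_id: 'default',
      name: 'default',
      classes: [
        { class: 'food', score: 0.7 },
        { class: 'pizza', score: 0.9 },
        { class: 'dish', score: 0.4 },
      ],
    }],
  }],
};

console.log(summarizeClasses(sampleResponse, 0.5));
```

With a minimum score of 0.5, the sample lists pizza before food and drops dish.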

From this point, we know that we can make API calls using the Watson Visual Recognition service to classify our images. We can extend this code for any program we may need. It's machine learning without actually doing any of the training! However...

Training a Custom Classifier

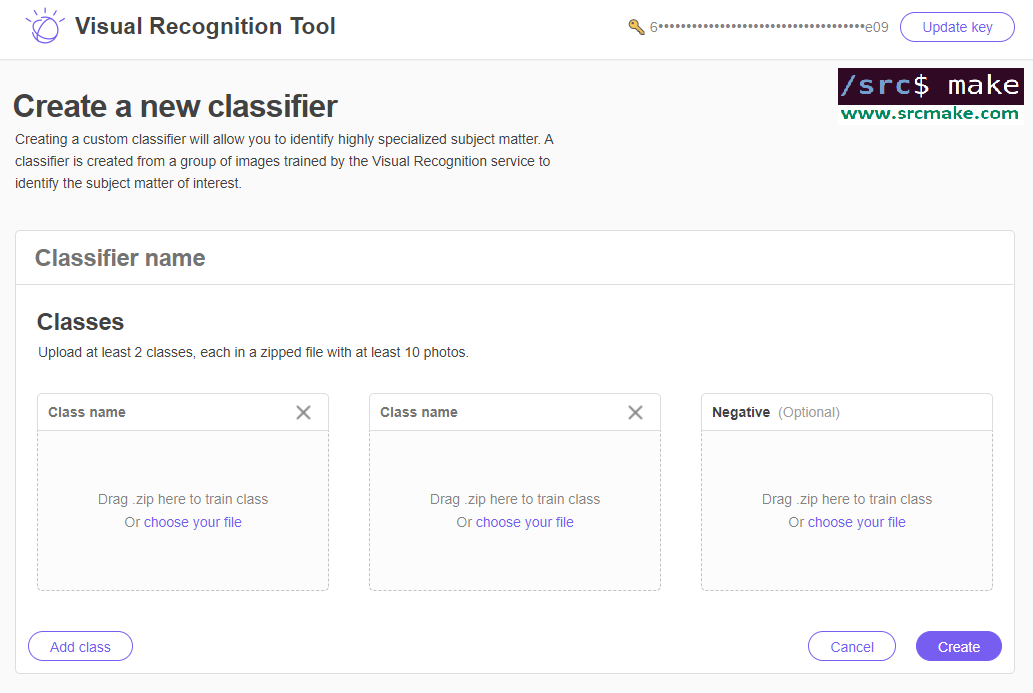

We can use Watson's General, Food, or Face visual recognition services to classify any images that we'd like to, but we can also train our very own classifier using the Visual Recognition tool.

It works like any other machine learning classifier: you feed Watson training data (images) with the correct labels, and Watson learns to classify new images with the labels.

Theoretically, we can use the Visualizer tool to train the classifier.

But let's look at the coding way.

The documentation for this on IBM's website provides a nice example of why we would need this. Perhaps we need to distinguish between specific dog breeds: in that case, we could feed Watson example pictures of huskies, beagles, and golden retrievers (as well as pictures that are not dogs) so that it could learn to classify dog breeds.

We're going to edit our Node project to train a dog classifier. First, we need our training data. We're going to use the dog images provided on IBM's website. Download beagle.zip, husky.zip, golden-retriever.zip, and cats.zip by clicking each of those links. If that doesn't work, then click the links to download them from IBM's documentation page. Add all of the zip files to our project folder.

Next, edit our index.js file to classify the dog breeds. Here's the code to change "index.js" to:

The only things that changed are the parameters, which name our classifier and point to the training data files. We also use the "createClassifier" method of visual recognition to create the classifier.
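To make those parameters concrete, here's a rough sketch of how they could be put together. It assumes the v3 convention of one `<class>_positive_examples` entry per class plus an optional `negative_examples` entry, each holding a stream of a zip file. The `buildClassifierParams` helper and the class names chosen in the usage comment are illustrative, not necessarily what the original code uses.

```javascript
const fs = require('fs');

// Hypothetical helper: builds the params object for createClassifier.
// Each class gets a "<class>_positive_examples" key holding a zip stream;
// "negative_examples" holds images that belong to none of the classes.
function buildClassifierParams(name, positiveZips, negativeZip, open) {
  // Default to reading the zip files from disk.
  const openFile = open || ((zipPath) => fs.createReadStream(zipPath));
  const params = { name };
  for (const [className, zipPath] of Object.entries(positiveZips)) {
    params[`${className}_positive_examples`] = openFile(zipPath);
  }
  if (negativeZip) {
    params.negative_examples = openFile(negativeZip);
  }
  return params;
}

// Usage sketch (commented out: it needs the SDK client, a key, and the zips).
// const params = buildClassifierParams('dogs', {
//   beagle: './beagle.zip',
//   husky: './husky.zip',
//   goldenretriever: './golden-retriever.zip',
// }, './cats.zip');
// client.createClassifier(params, (err, res) => {
//   if (err) return console.error(err);
//   console.log(JSON.stringify(res, null, 2));
// });
```

The optional `open` argument only exists so the helper can be exercised without the zip files on disk.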

Time to create our classifier. In the terminal:

node index.js

The output will be as follows:

It's a bit boring, but make note of the classifier id. We'll need to call this particular classifier when we want to actually use it.
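A freshly created classifier needs some time to train before it can be used. As a sketch, assuming the SDK's `getClassifier` method and a `status` field that ends up as `ready` or `failed`, you could check on it with the classifier id like this. The `waitUntilReady` helper, the id in the usage comment, and the polling interval are hypothetical.

```javascript
// Hypothetical helper: polls getClassifier until training finishes.
// `client` must expose getClassifier({ classifier_id }, callback).
function waitUntilReady(client, classifierId, intervalMs, callback) {
  client.getClassifier({ classifier_id: classifierId }, (err, info) => {
    if (err) return callback(err);
    if (info.status === 'ready') return callback(null, info);
    if (info.status === 'failed') {
      return callback(new Error(`Training failed for ${classifierId}`));
    }
    // Still training: check again after a pause.
    setTimeout(
      () => waitUntilReady(client, classifierId, intervalMs, callback),
      intervalMs
    );
  });
}

// Usage sketch (commented out: it needs the SDK client and a real id).
// waitUntilReady(client, 'dogs_123456789', 5000, (err, info) => {
//   if (err) return console.error(err);
//   console.log('Classifier is ready:', info.classifier_id);
// });
```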

Using Our Custom Classifier

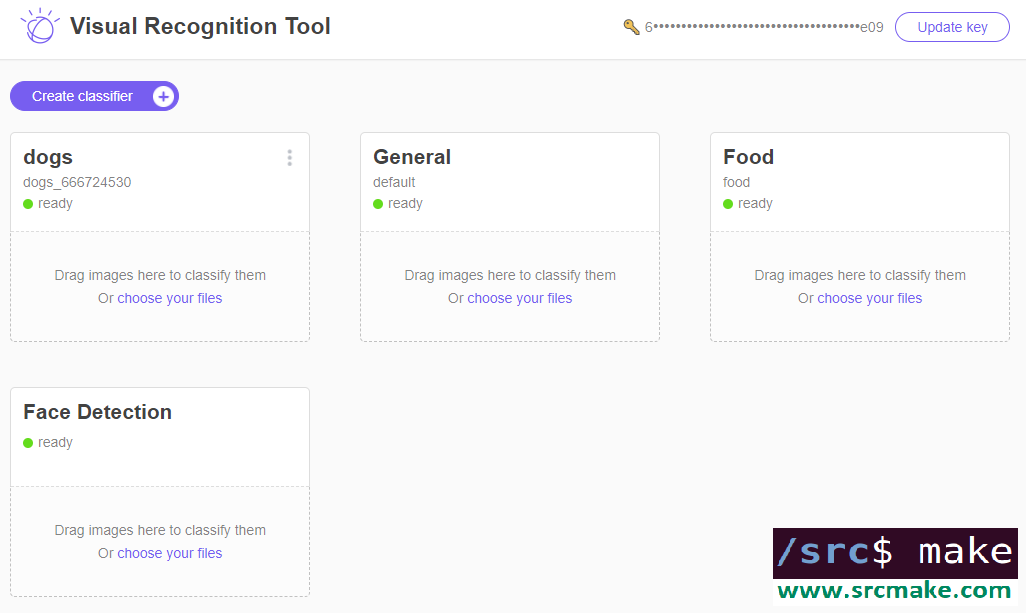

We made a custom classifier, but how do we use it? Well...we can go back to the webpage for the Visual Recognition demo, enter our API key, and we'd see that our classifier now shows up.

You can test it out there, although most likely you'll want to access the classifier using a program.
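From a program, the main difference from the earlier classify call is telling Watson which classifier to run. Here's a small sketch, assuming the SDK's `classify` method accepts a `classifier_ids` array and an optional `threshold`. The `customClassifyParams` helper, the image name, and the classifier id in the usage comment are placeholders.

```javascript
// Hypothetical helper: builds classify params that target one custom classifier.
function customClassifyParams(imageStream, classifierId, threshold) {
  const params = {
    images_file: imageStream,
    classifier_ids: [classifierId], // which classifier(s) to run
  };
  if (typeof threshold === 'number') {
    params.threshold = threshold; // minimum score for a class to be returned
  }
  return params;
}

// Usage sketch (commented out: it needs the SDK client and a real classifier id).
// const fs = require('fs');
// const params = customClassifyParams(
//   fs.createReadStream('./my_dog.jpg'), // placeholder test image
//   'dogs_123456789',                    // placeholder classifier id
//   0.5
// );
// client.classify(params, (err, res) => {
//   if (err) return console.error(err);
//   console.log(JSON.stringify(res, null, 2));
// });
```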

Luckily, the Watson library is going to help us with this. Actually, it's literally the same code that we used before to classify an image. Just uncomment line 18 and add your own classifier id. (And change the test picture name.) I won't be typing this out since we just covered it, but in the video I will go over this, so watch the video if you want to see me use the custom classifier in our Node project.

Conclusion

We did a lot, but now you can use Watson's Visual Recognition service to classify images. You're also able to use it to train your own data and make your own classifier. Of course, we went over a lot of Node example code, but you can take that code and spin it off for your own personal project. You can also write this code in Java or Python, just look at the references to see IBM's example code. (Although obviously, the code we wrote is wayyyy better.)

The video for this topic, where I go over all the steps, is found below.

Like this content and want more? Feel free to look around and find another blog post that interests you. You can also contact me through one of the various social media channels.

Twitter: @srcmake
Discord: srcmake#3644
Youtube: srcmake
Twitch: www.twitch.tv/srcmake
Github: srcmake

References:

1. www.ibm.com/watson/services/visual-recognition/
2. console.bluemix.net/docs/services/visual-recognition/getting-started.html#getting-started-tutorial
3. www.ibm.com/watson/developercloud/visual-recognition/api/v3/
4. console.bluemix.net/docs/services/visual-recognition/tutorial-custom-classifier.html#creating-a-custom-classifier

Author

Hi, I'm srcmake. I play video games and develop software.

License: All code and instructions are provided under the MIT License.
